8th Wall Blog

Building the Next Generation of SLAM for the Browser

An inside look at 8th Wall’s approach to computer vision R&D

8th Wall's AR Engine offers developers a world-class SLAM (simultaneous localization and mapping) system that runs directly in the browser, powering interactive WebAR experiences. The AR Engine is as fast as it is mighty, reaching desktop benchmarks of 300 FPS. 8th Wall's SLAM system needs to be computationally efficient because it must be versatile: it has to run in any major browser on mobile phones, desktops, tablets, and more. For 2021, our engineering team was asked to take a deeper dive into the AR Engine, kicking off new initiatives aimed at making this SLAM system better, faster, and more powerful than ever.

SLAM systems let developers create World Effects, where augmented content is placed in the user's world. These effects are powered by 8th Wall's hyper-optimized computer vision algorithms, which run on the user's device in the web browser using standard WebGL, WebRTC, DeviceMotion, and WebAssembly APIs. A custom GLSL shading pipeline computes map points from the camera feed with subpixel accuracy, and these points are then triangulated into a 3D representation of the world. From this representation, algorithms estimate how the user is moving the phone on every frame, and a graphics engine applies the opposite motion to virtual objects so that they appear stable in the user's reality. Because these algorithms run on the device, the user's data stays private and secure. Because the computations run every frame, they must be very fast.

The first of 8th Wall's 2021 SLAM improvements can be found in the new Release 16, which brings the biggest improvements to our AR Engine since we launched the world's first WebAR solution in 2018. Release 16 increases WebAR FPS on phones by up to 70% while simultaneously decreasing the pixel-level error by 50%. This means that with Release 16, our AR Engine is now both better and faster than ever! Getting these wins out of our already highly optimized engine is a great credit to the engineers on our team, as well as to 8th Wall's unique tools, technology, and approach to computer vision research and development.

Closing the Gap Between Computer Vision Research and Development

Computer vision can be a challenging specialty of computer science. Many standard algorithms are amenable to problem specification, analysis, and solution: once you have identified a problem and an approach, what remains is coding. In contrast, computer vision requires processing a high-dimensional, rapidly changing stream of data with complex statistics, so it's rarely amenable to a first-principles approach. Computer vision research involves theory, unit tests, synthetic data, real-world data, complex visualizations, hypothesis generation, validation, and benchmarks. Even after all of that, translating research into a runtime environment can be a fraught endeavor. Mobile processors and GPUs can perform differently than desktop ones, and code may have different performance characteristics on devices. Datasets may also fail to cover a sufficient range of real-world scenarios, so an algorithm that performs well in a research environment may fail spectacularly in the real world.

At 8th Wall, we bridge the gaps between research, development, and deployment with an unconventional approach: we built our computer vision research platform from the ground up so that research code can be directly tested, iterated on, validated, and deployed. In practice, this means we avoid many traditional tools of computer vision research, like MATLAB and NumPy, as well as UI frameworks that are tied to any particular platform. Instead, we focus on developing C++ code that can run on the desktop, in native mobile apps, or on the web. We build portable UI and graphics frameworks so that we can immediately jump from a visualization of synthetic or recorded data into a real-world test on a real device.

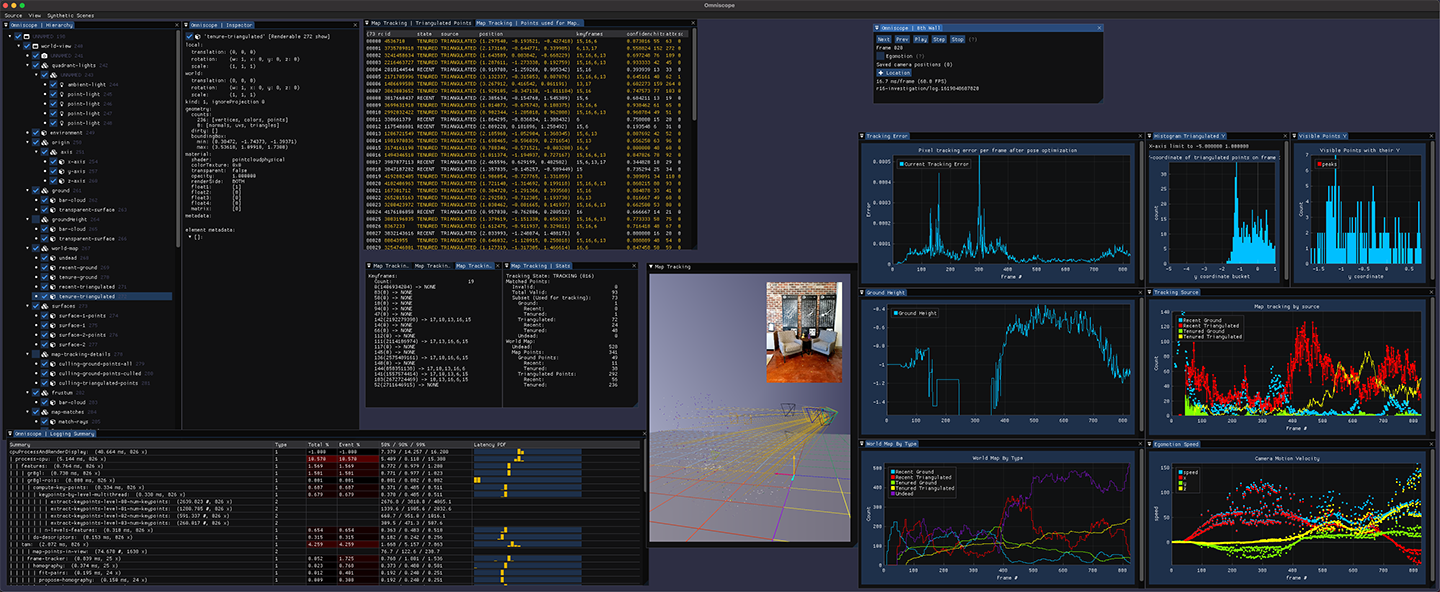

Developing and Visualizing Algorithms Using Omniscope

The heart of our computer vision research at 8th Wall is a framework called Omniscope, which allows us to develop and visualize algorithms and benchmarks on synthetic or recorded data on the desktop, and then immediately view the same visualizations on live camera data on the web or in a native app. This is achieved by writing backend visualization modules (or views) in C++ that can then be displayed by many different frontends. Omniscope views operate on the typical lifecycle of a camera application frame, including GPU and CPU processing as well as rendering. Each frontend is responsible for collecting sensor data, either from the OS or the web browser for real-time applications, or from recorded or synthetic datasets for offline development. Depending on the frontend, this may happen on one thread (JavaScript), two threads (desktop), or three threads (mobile native), so designing a backend view framework that works across all of these combinations was critically important. The frontend is also responsible for displaying rendered results contained in a GPU buffer, as well as any text, charts, graphs, scene graphs, metadata, or other artifacts generated by the view.
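
The split between backend views and frontends can be sketched in a few lines of C++. Omniscope's real interfaces are not public, so every name below (`Frame`, `ViewOutput`, `View`, `FrameCounterView`, `runSingleThreadedFrontend`) is a hypothetical stand-in. The sketch assumes only what the paragraph above describes: a view exposes GPU processing, CPU processing, and rendering stages, and a frontend supplies the frames and drives those stages.

```cpp
#include <string>
#include <vector>

// Hypothetical sensor sample handed to a view by a frontend.
struct Frame {
  int index;
  double timestampSeconds;
};

// Hypothetical container for the artifacts a view produces (text only here).
struct ViewOutput {
  std::vector<std::string> text;
};

// Hypothetical backend view interface following the per-frame lifecycle:
// GPU processing, then CPU processing, then rendering.
class View {
public:
  virtual ~View() = default;
  virtual void processGpu(const Frame &frame) = 0;
  virtual void processCpu(const Frame &frame) = 0;
  virtual void render(ViewOutput *out) = 0;
};

// A trivial view that counts the frames it has processed.
class FrameCounterView : public View {
public:
  void processGpu(const Frame &) override {}  // A real view would run shaders here.
  void processCpu(const Frame &) override { ++framesSeen_; }
  void render(ViewOutput *out) override {
    out->text.push_back("frames seen: " + std::to_string(framesSeen_));
  }

private:
  int framesSeen_ = 0;
};

// A single-threaded frontend: it feeds frames (live, recorded, or synthetic)
// to the view and runs every stage in order on the same thread.
void runSingleThreadedFrontend(const std::vector<Frame> &frames, View *view,
                               ViewOutput *out) {
  for (const Frame &frame : frames) {
    view->processGpu(frame);
    view->processCpu(frame);
    view->render(out);
  }
}
```

Because the view only sees `Frame` objects and writes to `ViewOutput`, the same view could be driven by a frontend that runs the stages on two or three threads instead. That threading is not shown here and would need synchronization that this sketch omits.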

Leveraging ImGui to Dive Deep Into Data

The Omniscope frontend for Web uses React for layout and 8th Wall's camera pipeline module framework to marshal data through views, but on desktop we use ImGui. ImGui is a lightweight, portable UI framework that is incredibly powerful. Developers use C++ with static state to implement expressive UIs declaratively in a way that's very similar to React. We use ImGui's built-in widgets and extensions for tables (latency profiles), trees (scene graph and metadata inspector), text boxes (debug information), checkboxes and sliders (algorithm tuning), and plots (time series, statistics). A key feature of ImGui is that it can directly display textures from a rendering framework. This means we can use the same C++ rendering engine on desktop, native mobile and web.

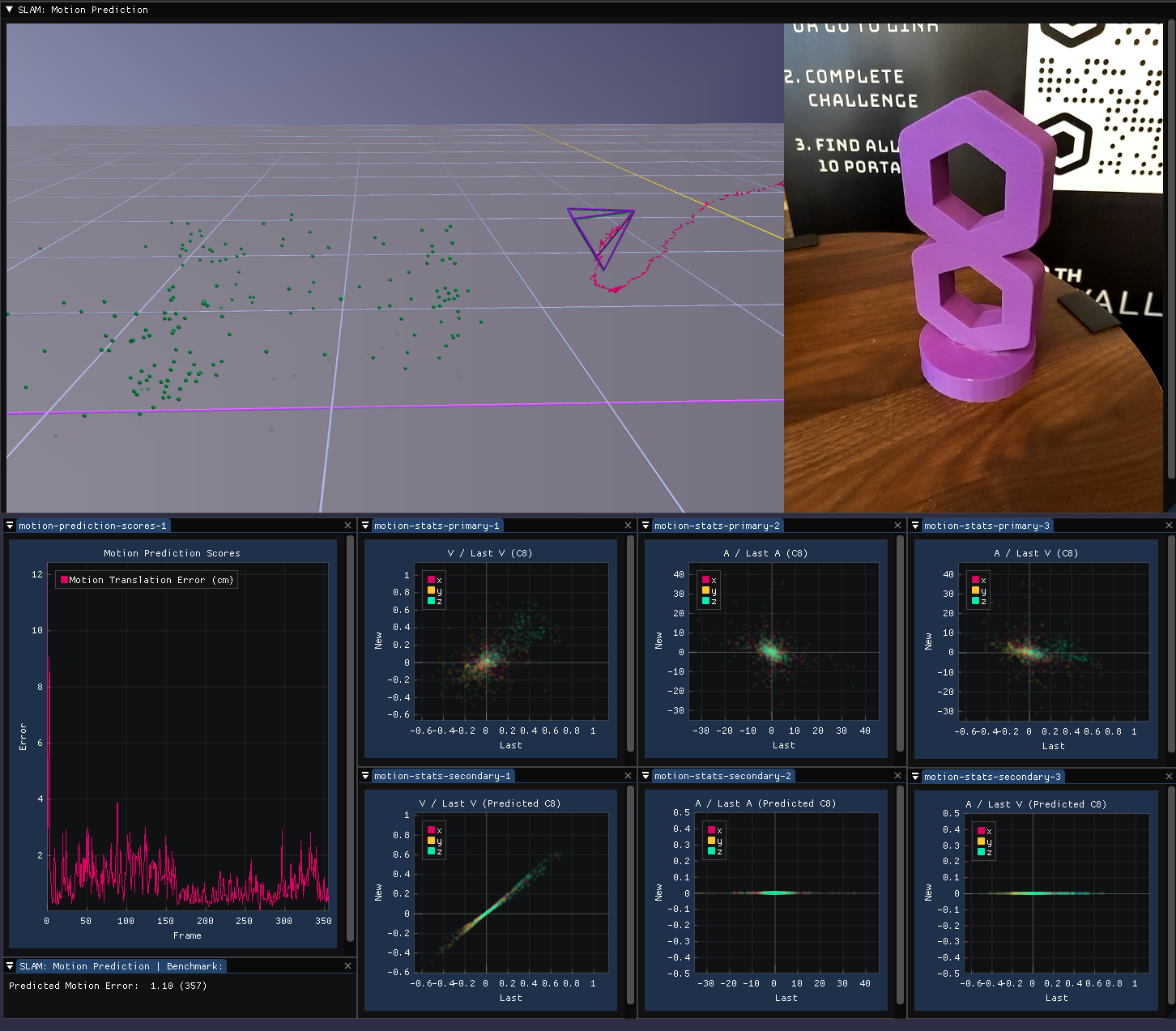

The Need for a C++ 3D Rendering Engine

For understanding SLAM algorithms, 3D visualization is critical. It's important for us to have a 3D engine that is portable to desktop, mobile native, and the web, which means it needs to be written in C++. Traditionally, C++ 3D renderers have been part of game engines, which provide complex application runtimes. It can be difficult to separate out the rendering components of engines like Unity or Godot and build them as lightweight, cross-platform, standalone renderers. The closest thing we were able to find to a pure C++ renderer was Filament by Google, but it too was not built to separate the runtime from the renderer. In contrast, there are a number of excellent standalone rendering engines on the web, notably three.js and Babylon.js, but these would not be easy to port to ImGui or native mobile apps. To solve this problem and meet our expanding needs for excellent computer vision algorithm visualization, we built a new in-house rendering engine in C++ from scratch. Called the Object8 renderer, it allows 3D scenes to be specified compactly in an ergonomic, declarative C++ API. In addition to supporting 3D scenes, it has APIs to directly position objects in clip space, which is useful for drawing a camera feed behind a scene or for overlaying 2D content on top of a scene. A subscene referencing system allows the renderer to be used to generate complex layouts, and is even powerful enough to support the next generation of GPU shaders for computer vision at 8th Wall.

The results of the investments we have made into technology development at 8th Wall speak for themselves. With Release 16, WebAR experiences are now more stable than ever before with an even faster user experience. We are excited to keep building out the capabilities of WebAR and to empower 8th Wall's amazing developers with tools to create powerful experiences for the web.

Interested in joining our Engineering team on our journey to continue to deliver a world-class AR engine optimized for the browser? Apply via our Careers page or email me directly at nb@8thwall.com.